OpenAI has introduced GPT-5.1-Codex-Max, a frontier agentic coding model designed for long-running software engineering tasks that span millions of tokens and multi-hour sessions. It is available today inside Codex in the CLI, IDE extension, cloud integration and code review surfaces, with API access coming soon.

What is GPT-5.1-Codex-Max optimised for?

GPT-5.1-Codex-Max is built on an update to OpenAI’s foundational reasoning model. This base model is trained on agentic tasks across software engineering, math, research and other domains. On top of this, GPT-5.1-Codex-Max is trained on real-world software engineering workloads such as PR creation, code review, frontend coding and Q&A.

The model targets frontier coding evaluations rather than general chat. GPT-5.1-Codex-Max and the broader Codex family are recommended only for agentic coding tasks in Codex or Codex-like environments, not as a drop-in replacement for GPT-5.1 in general-purpose conversations.

GPT-5.1-Codex-Max is also the first Codex model trained to operate in Windows environments. Its training includes tasks that make it a better collaborator in the Codex CLI, including improved behaviour when running commands and working with files under the Codex sandbox.

Compaction and long-running tasks

A core feature of GPT-5.1-Codex-Max is compaction. The model still runs inside a fixed context window, but it is natively trained to work across multiple context windows by pruning its interaction history while preserving the most important information over long horizons.

In Codex applications, GPT-5.1-Codex-Max automatically compacts its session when it approaches the context window limit. It creates a fresh context window that keeps the essential state of the task, then continues execution. This process repeats until the task completes.
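OpenAI has not published how compaction works internally, so the following is only a minimal Python sketch of the loop described above, under stated assumptions. A session tracks token usage against a fixed window; when the next item would push usage past a threshold, it starts a "fresh window" that keeps only items flagged as essential plus one short summary placeholder, then continues. The `Item` and `Session` classes, the `essential` flag, the 90% threshold and all token counts are hypothetical; the real model is trained to decide what to preserve rather than relying on an explicit flag.

```python
from dataclasses import dataclass, field


@dataclass
class Item:
    text: str
    tokens: int
    essential: bool = False  # hypothetical flag: state that must survive compaction


@dataclass
class Session:
    limit: int              # context window size in tokens (illustrative value)
    threshold: float = 0.9  # compact once a new item would push usage past 90%
    history: list[Item] = field(default_factory=list)
    compactions: int = 0

    def used(self) -> int:
        return sum(item.tokens for item in self.history)

    def compact(self) -> None:
        """Start a 'fresh window' holding only essential state plus one summary."""
        kept = [item for item in self.history if item.essential]
        pruned = len(self.history) - len(kept)
        # The summary is not essential, so the next compaction replaces it.
        summary = Item(f"summary of {pruned} pruned items", tokens=10)
        self.history = kept + [summary]
        self.compactions += 1

    def append(self, item: Item) -> None:
        budget = self.threshold * self.limit
        if self.used() + item.tokens > budget:
            self.compact()
            if self.used() + item.tokens > budget:
                raise RuntimeError("essential state alone no longer fits the window")
        self.history.append(item)


# Usage: simulate a long task of 1,000 steps inside a small 8,000-token window.
session = Session(limit=8000)
peak = 0
for step in range(1000):
    # Every 100th step records something the task cannot lose, e.g. a test result.
    session.append(Item(f"step {step}", tokens=500, essential=(step % 100 == 0)))
    peak = max(peak, session.used())
print(session.compactions, peak)
```

The design choice worth noting is that compaction repeats: the session never grows past its budget, yet the task keeps running for far more tokens than a single window holds.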

OpenAI reports internal evaluations where GPT-5.1-Codex-Max works independently for more than 24 hours on a single task. During these runs, the model iterates on its implementation, fixes failing tests and eventually produces a successful result.

Reasoning effort, speed and token efficiency

GPT-5.1-Codex-Max uses the same reasoning effort control introduced with GPT-5.1, but tuned for coding agents. Reasoning effort selects how many thinking tokens the model uses before committing to an answer.

On SWE-bench Verified, GPT-5.1-Codex-Max with medium reasoning effort achieves higher accuracy than GPT-5.1-Codex at the same effort while using 30% fewer thinking tokens. For tasks that are not latency sensitive, OpenAI introduces a new Extra High reasoning effort level, written as xhigh, which lets the model think longer to reach better answers. Medium remains the recommended setting for most workloads.
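The guidance above amounts to a simple selection rule plus a token-budget estimate. The sketch below is hypothetical: `Effort`, `choose_effort` and `thinking_tokens_after_reduction` are illustrative names, not part of any OpenAI SDK or Codex configuration, and the enum lists only the levels named in this article. The 30% figure is what OpenAI reports for SWE-bench Verified at medium effort, so applying it to an individual task gives only a rough estimate.

```python
from enum import Enum


class Effort(str, Enum):
    # Only the levels named in this article; the real setting may accept others.
    MEDIUM = "medium"  # recommended default for most workloads
    HIGH = "high"
    XHIGH = "xhigh"    # Extra High: longer thinking for non-latency-sensitive tasks


def choose_effort(latency_sensitive: bool, hard_task: bool) -> Effort:
    """Hypothetical policy: stay on medium unless the task is hard and the caller can wait."""
    if hard_task and not latency_sensitive:
        return Effort.XHIGH
    return Effort.MEDIUM


def thinking_tokens_after_reduction(baseline_tokens: int, reduction: float = 0.30) -> int:
    """Estimate thinking tokens if usage drops by `reduction` relative to a baseline run."""
    return round(baseline_tokens * (1 - reduction))


# Usage: an interactive fix stays on medium; an overnight refactor can afford xhigh.
print(choose_effort(latency_sensitive=True, hard_task=True).value)
print(choose_effort(latency_sensitive=False, hard_task=True).value)
print(thinking_tokens_after_reduction(20_000))
```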

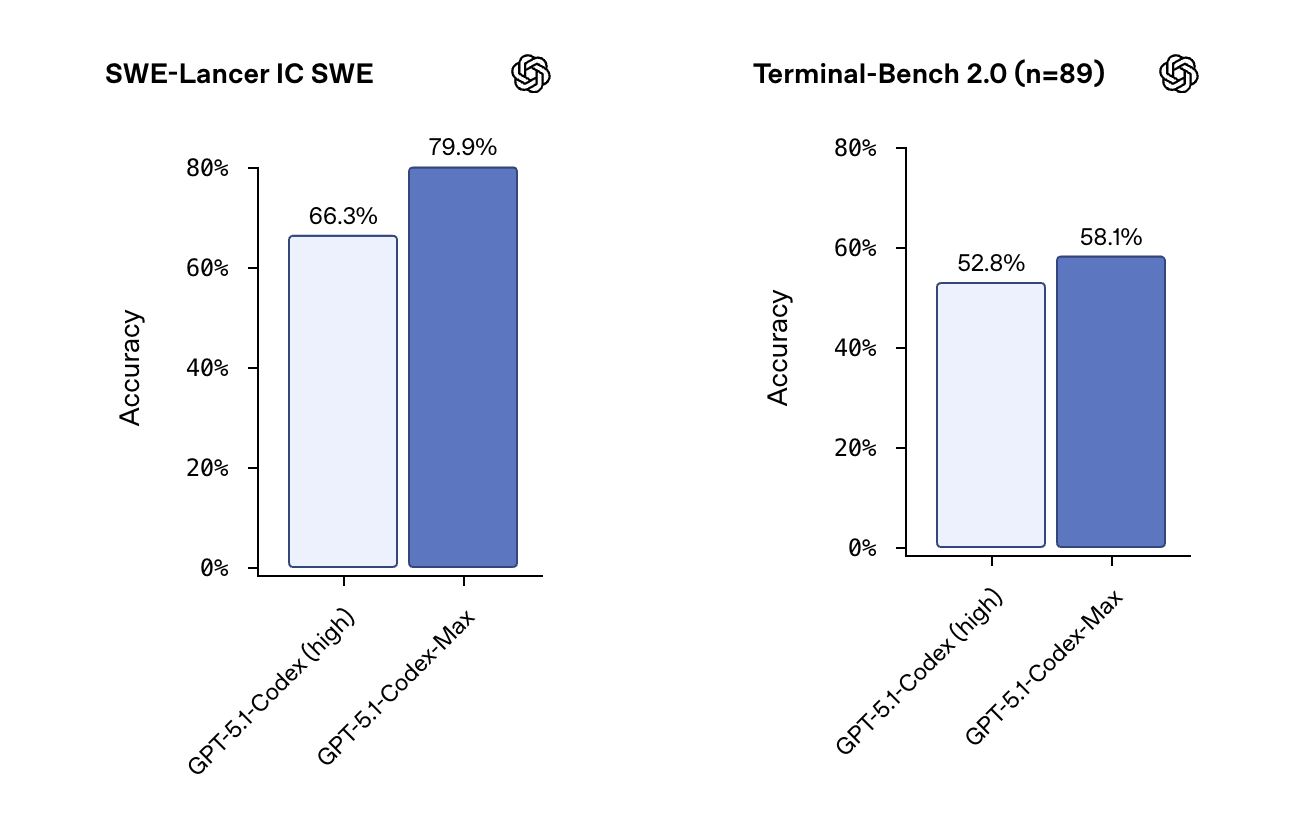

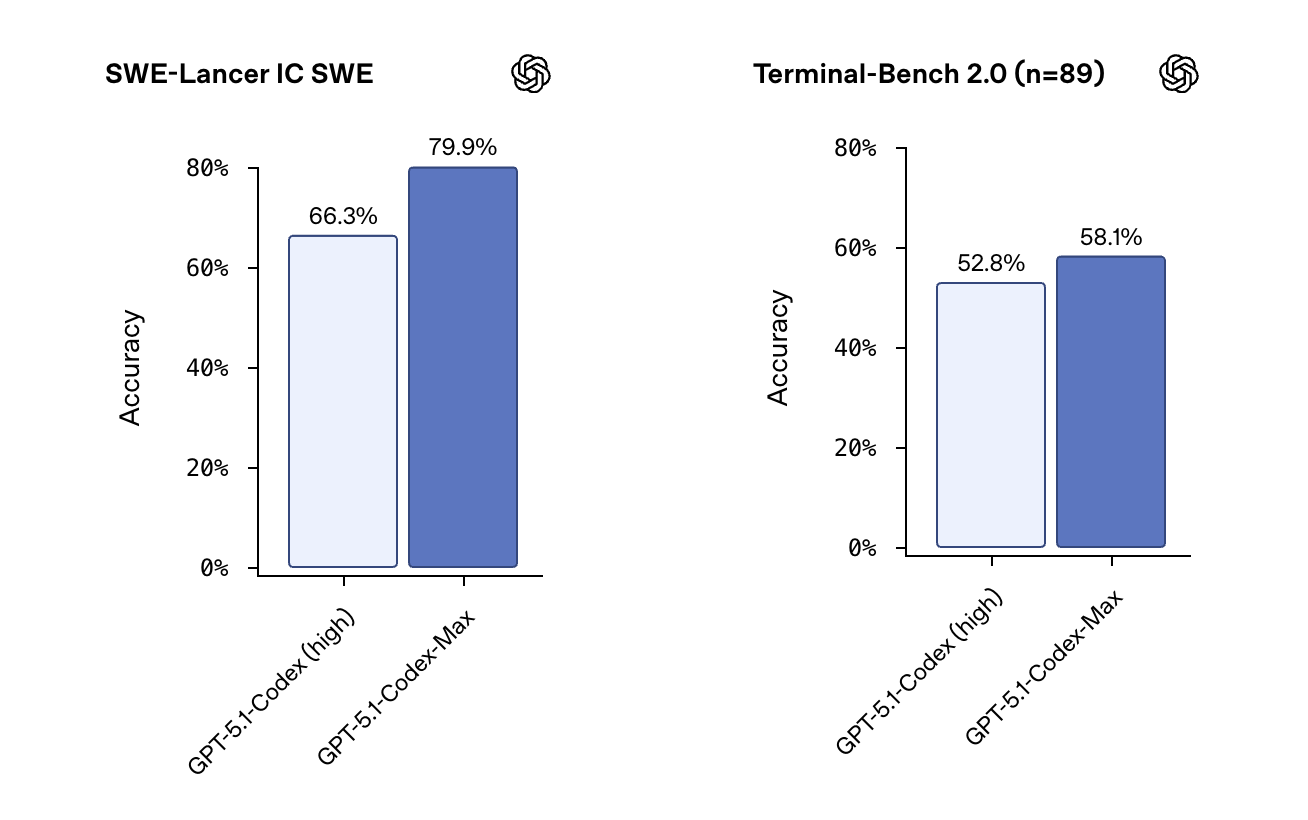

These changes show up in benchmark results. With GPT-5.1-Codex evaluated at high reasoning effort and GPT-5.1-Codex-Max at xhigh, OpenAI reports the following scores: on the 500 issues of SWE-bench Verified, 73.7% for GPT-5.1-Codex and 77.9% for GPT-5.1-Codex-Max; on SWE-Lancer IC SWE, 66.3% and 79.9%; and on Terminal-Bench 2.0, 52.8% and 58.1%. All evaluations were run with compaction enabled, and Terminal-Bench 2.0 uses the Codex CLI inside the Laude Institute Harbor harness.
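To put the reported scores in perspective, the short Python sketch below computes the absolute gain in percentage points and the gain relative to the GPT-5.1-Codex baseline for each benchmark. It uses only the numbers OpenAI reports above; the `scores` dictionary and `deltas` helper are illustrative names.

```python
# Scores reported by OpenAI (percent): GPT-5.1-Codex at high effort,
# GPT-5.1-Codex-Max at xhigh, compaction enabled for all runs.
scores = {
    "SWE-bench Verified": (73.7, 77.9),
    "SWE-Lancer IC SWE": (66.3, 79.9),
    "Terminal-Bench 2.0": (52.8, 58.1),
}


def deltas(table):
    """Return {benchmark: (gain in percentage points, relative gain in %)}."""
    out = {}
    for name, (base, new) in table.items():
        points = round(new - base, 1)
        relative = round(100 * (new - base) / base, 1)
        out[name] = (points, relative)
    return out


for name, (points, relative) in deltas(scores).items():
    print(f"{name}: +{points} points ({relative}% relative)")
```

Reading the results this way shows that the largest jump is on SWE-Lancer IC SWE, where the absolute gain is more than three times that on SWE-bench Verified.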

In qualitative tests, GPT-5.1-Codex-Max generates high-quality frontend designs with functionality and visual quality similar to GPT-5.1-Codex, but at a lower overall token cost, thanks to more efficient reasoning traces.

Key Takeaways

- GPT-5.1-Codex-Max is a frontier agentic coding model built on an updated reasoning base and further trained on real software engineering tasks such as PR creation, code review, frontend coding and Q&A. It is available today across the Codex CLI, IDE, cloud and code review surfaces, with API access coming later.

- The model introduces native support for long-running work through compaction: it repeatedly compresses its own history to span multiple context windows, enabling autonomous coding sessions that can continue for more than 24 hours over millions of tokens while staying on a single task.

- GPT-5.1-Codex-Max retains the reasoning effort control from GPT-5.1. At medium effort it outperforms GPT-5.1-Codex on SWE-bench Verified while using about 30% fewer thinking tokens, and a new Extra High (xhigh) mode is available for the hardest tasks.

- On frontier coding benchmarks with compaction enabled, GPT-5.1-Codex-Max at xhigh effort improves on GPT-5.1-Codex at high effort: SWE-bench Verified rises from 73.7% to 77.9%, SWE-Lancer IC SWE from 66.3% to 79.9%, and Terminal-Bench 2.0 from 52.8% to 58.1%.

GPT-5.1-Codex-Max is a clear statement that OpenAI is doubling down on long-running, agentic coding rather than short, single-shot edits. Together, compaction, strong results on frontier coding evaluations such as SWE-bench Verified and SWE-Lancer IC SWE, and explicit reasoning effort controls make this model a test case for scaling test-time compute in real software engineering workflows, not just on benchmarks. OpenAI's Preparedness Framework and the Codex sandbox will be critical as this capability moves into production pipelines. Overall, GPT-5.1-Codex-Max is a frontier agentic coding model that operationalises long-horizon reasoning in practical developer tools.